There are many inborn brain structures that encode spatial information. For example, Gross & Graziano's (1995) "Multiple representations of space in the brain" discusses the encoding of spatial knowledge in primates. From these kinds of studies, we conclude that it is reasonable to endow our agent with a hard-coded spatial memory, rather than expecting spatial memory to emerge spontaneously. In so doing, we hard-code presupposed knowledge about the environment, namely, that the environment has a three-dimensional structure. By making this assumption, we restrict our scope to agents that indeed exist in a three-dimensional world, such as physical robots. The same assumption would probably not hold for agents that exist in more abstract worlds, for example bots that crawl the internet.

To remain consistent with constructivist epistemology, spatial memory should not encode presupposed ontological knowledge about the environment. Recall that the agent never knows which entities "as such" exist in the world, but only knows possibilities of interaction. Accordingly, our spatial memory only encodes the knowledge that certain interactions have been or can be enacted in certain regions of space. Using again the terms experiment and result, the agent knows that, if it performed a certain experiment in a certain region of space, it would obtain a certain result, but the agent does not know the essence of the entity that occupies this region of space.

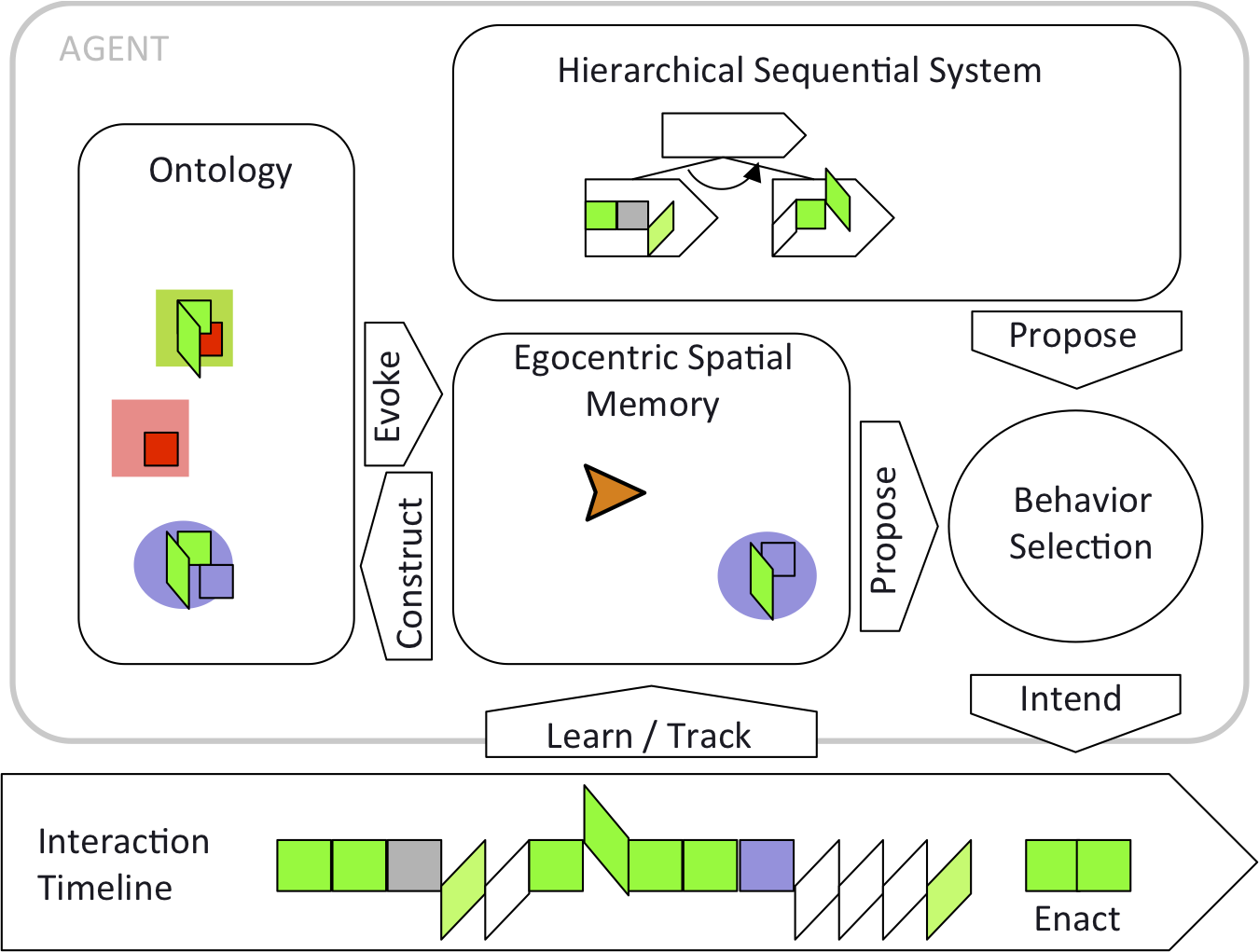

Radical interactionism, introduced in Lesson 5, facilitates the implementation of a constructivist spatial memory. In the RI formalism, spatial memory simply maintains the position of enacted interactions relative to the agent. Figure 62 shows our cognitive architecture implemented with RI.

Figure 62: The Enactive Cognitive Architecture (ECA).

Bottom: the Interaction Timeline shows the stream of interactions enacted over time. Enacted interactions are represented by colored symbols as shown on the bottom line of Figure 42. Here, enacted interactions come from the example in Video 41: green trapezoids represent turning towards a prey, green squares represent stepping towards a prey, blue squares represent eating a prey.

Top: the Sequential System represents the hierarchical sequential regularity learning mechanism that we have been developing thus far.

Center: Spatial Memory keeps track of the position (relative to the agent) of enacted interactions over the short term. The orange arrowhead represents the agent's position at the center of spatial memory, oriented towards the right. The blue square and the green triangle represent interactions that have been enacted in the front right of the agent. When the agent moves, spatial memory is updated to reflect the relative displacement of enacted interactions, as we will develop on the next page. This egocentric spatial memory is inspired by the superior colliculus in the mammalian brain.
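As an illustration of the egocentric update described above, here is a minimal Python sketch. It is not the course's implementation (which is developed on the next page); the names `Place`, `SpatialMemory`, and `persistence`, the two-dimensional simplification, and the axis conventions are all assumptions made for this example.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Place:
    """An enacted interaction located relative to the agent (hypothetical structure)."""
    interaction: str
    x: float  # forward axis: positive is in front of the agent
    y: float  # lateral axis: positive is to the agent's left
    age: int = 0  # number of updates since the interaction was enacted


@dataclass
class SpatialMemory:
    """Short-term egocentric memory: the agent is always at (0, 0), facing +x."""
    persistence: int = 5  # assumed lifetime of a place, in update steps
    places: list = field(default_factory=list)

    def add(self, interaction, x, y):
        self.places.append(Place(interaction, x, y))

    def update(self, translation=(0.0, 0.0), rotation=0.0):
        """Shift stored places after the agent moves.

        The agent first translates by `translation` (expressed in its frame
        before the move), then rotates counterclockwise by `rotation` radians.
        Stored places undergo the inverse displacement.
        """
        tx, ty = translation
        cos_r, sin_r = math.cos(-rotation), math.sin(-rotation)
        kept = []
        for place in self.places:
            dx, dy = place.x - tx, place.y - ty
            place.x = dx * cos_r - dy * sin_r
            place.y = dx * sin_r + dy * cos_r
            place.age += 1
            if place.age <= self.persistence:  # forget old places
                kept.append(place)
        self.places = kept
```

For instance, a place stored at `(2, 1)` moves to `(1, 1)` after the agent steps forward by one unit, and then to `(1, -1)` after the agent turns left by 90 degrees, because the place is now on the agent's right.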

Spatial memory is only short-term. It is not intended to construct a long-term map of the environment, but only to keep track of the relative positions where interactions have been recently enacted, in order to detect spatial overlap. For example, the spatial overlap between the interactions turning towards a prey (green trapezoid) and eating a prey (blue square) allows the agent to infer that the object it saw earlier is the same one it then ate. The agent can thus associate these two interactions in a bundle that represents the category of entities corresponding to prey.
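The overlap detection and bundling described above could be sketched as follows. This is only one possible reading: the function names, the `radius` threshold, and the representation of places as `(interaction, x, y)` tuples are assumptions of this example, not part of the architecture as published.

```python
import math
from itertools import combinations


def overlapping_pairs(places, radius=0.5):
    """Return pairs of distinct interactions enacted within `radius` of each other.

    `places` is a list of (interaction, x, y) tuples in agent-relative
    coordinates. `radius` is an assumed overlap threshold.
    """
    pairs = set()
    for (a, ax, ay), (b, bx, by) in combinations(places, 2):
        if a != b and math.hypot(ax - bx, ay - by) <= radius:
            pairs.add(frozenset((a, b)))
    return pairs


def update_bundles(bundles, pairs):
    """Merge each overlapping pair into the list of bundles (sets of interactions).

    A pair that shares an interaction with existing bundles is merged with
    them; otherwise it starts a new bundle.
    """
    for pair in pairs:
        merged = set(pair)
        remaining = []
        for bundle in bundles:
            if bundle & merged:
                merged |= bundle
            else:
                remaining.append(bundle)
        remaining.append(merged)
        bundles = remaining
    return bundles
```

With places such as `("turn_toward_prey", 1.0, -1.0)` and `("eat_prey", 1.1, -0.9)`, the two interactions overlap and end up in the same bundle, while a distant `bump` interaction stays out of it.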

Left: the Ontology mechanism records bundles of interactions based on their spatial overlap observed in spatial memory. For example, the blue circle represents the prey bundle, which gathers the interactions that are afforded by prey. The large red square represents the wall object that the agent can experience through the bump interaction (small red square), but that it cannot see in the example in Video 41.

Once constructed, bundles allow the evocation of types of objects in spatial memory. In turn, evoked types of objects propose the interactions that they afford. For example, over time, seeing a prey in a certain region of space comes to evoke the eat interaction in this region.
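To make the evocation step concrete, here is a hedged Python sketch. It assumes bundles are stored as plain sets of interaction names and that a proposal is simply an `(interaction, position)` pair; the function name `evoke` and the interaction names are hypothetical.

```python
def evoke(bundles, enacted_interaction, position):
    """Propose the other interactions afforded at `position`.

    If `enacted_interaction` belongs to a bundle, every other interaction of
    that bundle is proposed at the same agent-relative position.
    """
    proposals = []
    for bundle in bundles:
        if enacted_interaction in bundle:
            # Sorted only to make the order of proposals deterministic.
            for afforded in sorted(bundle - {enacted_interaction}):
                proposals.append((afforded, position))
    return proposals


# Example: a prey bundle and a wall bundle (names are illustrative).
bundles = [{"see_prey", "eat_prey"}, {"bump"}]
proposals = evoke(bundles, "see_prey", (1.0, 0.0))
```

Here, seeing a prey at `(1.0, 0.0)` proposes `eat_prey` at that same position, whereas `bump` proposes nothing because its bundle contains no other interaction.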

Note that the term Ontology Mechanism is not meant to imply that the agent has access to the ontological essence of entities in its environment. Instead, this term denotes the fact that the agent constructs its own ontology of the world on the basis of its interaction experiences.

Right: the Behavior Selection mechanism balances the propositions made by the sequential system and by spatial memory, and then selects the next sequence of interactions to try to enact. For example, if the eat interaction is evoked in spatial memory in front of the agent, then the behavior selection mechanism may select eat as the next intended interaction to try to enact.
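One simple way to balance the two sources of propositions is a weighted sum, sketched below in Python. The text does not specify how the balance is computed, so the scoring scheme, the `spatial_weight` parameter, and the function name `select_interaction` are assumptions. The sketch also selects a single interaction rather than a sequence, for brevity.

```python
from collections import defaultdict


def select_interaction(sequential_proposals, spatial_proposals, spatial_weight=1.0):
    """Pick the interaction with the highest combined score.

    Each proposal is an (interaction, weight) pair. Spatial proposals are
    scaled by `spatial_weight` (an assumed tuning parameter) before being
    added to the scores coming from the sequential system.
    """
    scores = defaultdict(float)
    for interaction, weight in sequential_proposals:
        scores[interaction] += weight
    for interaction, weight in spatial_proposals:
        scores[interaction] += spatial_weight * weight
    if not scores:
        return None
    # Iterating over sorted names makes ties resolve alphabetically.
    return max(sorted(scores), key=scores.get)


sequential = [("step", 2.0), ("turn_left", 1.0)]
spatial = [("eat", 3.0)]  # eat is evoked in front of the agent
choice = select_interaction(sequential, spatial)
```

With these illustrative weights, `eat` is selected. Lowering `spatial_weight` to 0.5 would let the sequential system's `step` proposal win instead.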
