22. Interactional motivation

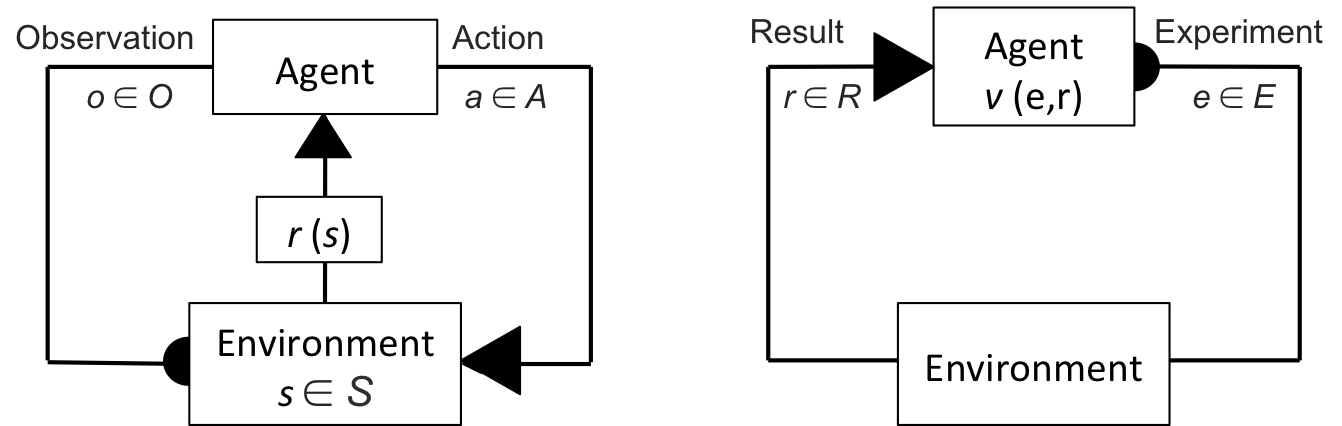

The sensorimotor paradigm makes it possible to implement a type of motivation called interactional motivation. Using the embodied model introduced on Page 12, Figure 22 compares traditional reinforcement learning (left) with interactional motivation (right).

Figure 22: Interactional motivation (right) compared to traditional reinforcement learning (left).

In reinforcement learning (Figure 22/left), the agent receives a reward r that specifies desirable goals to reach. In the case of simulated environments, the designer programs the reward function r(s) as a function of the environment's state. In the case of robots, a "reward button" is pressed either automatically when the robot reaches the goal, or manually by the experimenter to train the robot to reach the goal. The agent's policy is designed to choose actions based on their estimated utility for getting the reward. As a result, to an observer of the agent's behavior, the agent appears motivated to reach the goal defined by the experimenter. For example, to model an agent that seeks food, the designer assigns a positive reward to states of the world in which the agent reaches food.
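As a rough illustration of the reinforcement-learning side, the sketch below defines a reward function r(s) over the states of a tiny corridor world and derives a policy from estimated utilities. Everything in it is an assumption made for illustration: the four-cell corridor, the names `FOOD_STATE`, `reward`, `step`, `estimate_utilities`, and `policy`, the discount factor, and the use of Q-value iteration with a known transition model (a real agent would typically estimate utilities from experience instead).

```python
from typing import Dict, Tuple

# Hypothetical corridor world: states are cell indices 0..3 (illustrative only).
STATES = [0, 1, 2, 3]
FOOD_STATE = 3                      # the designer decides which state is the goal
ACTIONS: Dict[str, int] = {"left": -1, "right": +1}
GAMMA = 0.9                         # discount factor (assumed value)


def reward(state: int) -> float:
    """r(s): the reward is a function of the environment's state."""
    return 1.0 if state == FOOD_STATE else 0.0


def step(state: int, action: str) -> int:
    """Deterministic transition; the agent cannot leave the corridor."""
    return min(max(state + ACTIONS[action], 0), len(STATES) - 1)


def estimate_utilities(sweeps: int = 50) -> Dict[Tuple[int, str], float]:
    """Q-value iteration: utility of each action for getting future reward."""
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(sweeps):
        for s in STATES:
            for a in ACTIONS:
                s2 = step(s, a)
                q[(s, a)] = reward(s2) + GAMMA * max(q[(s2, b)] for b in ACTIONS)
    return q


def policy(q: Dict[Tuple[int, str], float], state: int) -> str:
    """Choose the action with the highest estimated utility."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


if __name__ == "__main__":
    q = estimate_utilities()
    print({s: policy(q, s) for s in STATES})
```

To an observer, this agent appears motivated to reach the food cell, but only because the designer marked that state as rewarding in `reward`.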

In interactional motivation (Figure 22/right), the agent has no predefined goal to reach. The environment may even lack a state, as we saw on Page 13 and as we will see again on the next page. However, the agent has predefined interactions that it can enact. The designer may associate a scalar valence v(i) with interactions, and design the agent's policy to try to enact interactions that have a positive valence. To model an agent that seeks food, the designer specifies an interaction corresponding to eating (the experiment of biting with the result of tasting good food) and assigns a positive valence to this interaction. In doing so, the designer predefines the agent's preferences of interaction without predefining which states or entities of the world constitute food.
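The contrast with a state-based reward can be sketched as follows. This is a minimal illustration under stated assumptions, not the course's implementation: the experiment and result labels, the valence values, the `InteractionalAgent` class, and the `run` helper are all hypothetical. The agent only remembers the last result of each experiment and prefers the experiment whose anticipated interaction has the highest valence v(i); it never receives a state from the environment.

```python
from typing import Callable, Dict, List, Tuple

Interaction = Tuple[str, str]       # (experiment, result)

# v(i): valences chosen by the designer (illustrative values).
VALENCE: Dict[Interaction, float] = {
    ("bite", "tasty"): 5.0,
    ("bite", "bland"): -1.0,
    ("move", "free"): 0.0,
    ("move", "bumped"): -2.0,
}


class InteractionalAgent:
    """Hypothetical agent that prefers interactions with positive valence."""

    def __init__(self, experiments: List[str]) -> None:
        self.experiments = list(experiments)
        self.last_result: Dict[str, str] = {}   # learned from experience only

    def choose_experiment(self) -> str:
        # Try each experiment once before relying on anticipation.
        for experiment in self.experiments:
            if experiment not in self.last_result:
                return experiment
        # Anticipate that each experiment yields the same result as last time,
        # then pick the experiment whose anticipated interaction has top valence.
        return max(
            self.experiments,
            key=lambda e: VALENCE[(e, self.last_result[e])],
        )

    def record(self, experiment: str, result: str) -> None:
        self.last_result[experiment] = result


def run(agent: InteractionalAgent,
        environment: Callable[[str], str],
        steps: int) -> List[Interaction]:
    """Let the agent enact interactions; the environment exposes no state."""
    trace: List[Interaction] = []
    for _ in range(steps):
        experiment = agent.choose_experiment()
        result = environment(experiment)
        agent.record(experiment, result)
        trace.append((experiment, result))
    return trace


if __name__ == "__main__":
    # A stateless toy environment: biting always tastes good, moving is free.
    env = lambda e: "tasty" if e == "bite" else "free"
    print(run(InteractionalAgent(["bite", "move"]), env, 5))
```

Note that nothing in `VALENCE` says which entity or state of the world is food: the designer only states that the interaction of biting and tasting something good is preferred.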

Overall, the sensorimotor paradigm makes it possible to design self-motivated agents without modeling the world as a predefined set of states. Instead, the agent is left alone to construct its own model of the world through its individual experience of interaction. Since there is no predefined model of the world, the agent is not bound to a predefined set of goals. For example, it can discover and categorize new edible entities in the world.

Interactional motivation is not the only possible motivational drive for sensorimotor agents. Recall that we introduced the drive to learn to predict the result of experiments on Page 14. Importantly, since no data in a sensorimotor agent directly represents the environment's state, sensorimotor agents can hardly be pre-programmed to perform a predefined task. If you want them to perform a predefined task, you will have to train them rather than program them.

Since sensorimotor agents are not programmed to seek predefined goals, we do not assess their learning by measuring their performance in reaching predefined goals, but by demonstrating the emergence of cognitive behaviors through behavioral analysis. We did our first behavioral analysis on Page 14, and we will continue doing behavioral analyses in this course.
