DeLiCio is an ongoing research project funded by the French National Research Agency (ANR), 2019-2023. It proposes fundamental research in the areas of Machine Learning/AI and process control, with applications to drone (UAV) fleet control.

Recent years have witnessed the rapid rise of Machine Learning (ML), which has delivered disruptive performance gains in several fields. Apart from undeniable methodological advances, these gains are often attributed to massive amounts of training data and computing power, which have enabled breakthroughs in speech recognition, computer vision and natural language processing. In this project, we propose to extend these advances to sequential decision making by multiple agents for planning and control. In particular, we target learning realistic behavior over multiple horizons, which requires long-term planning together with short-term, fine-grained control.

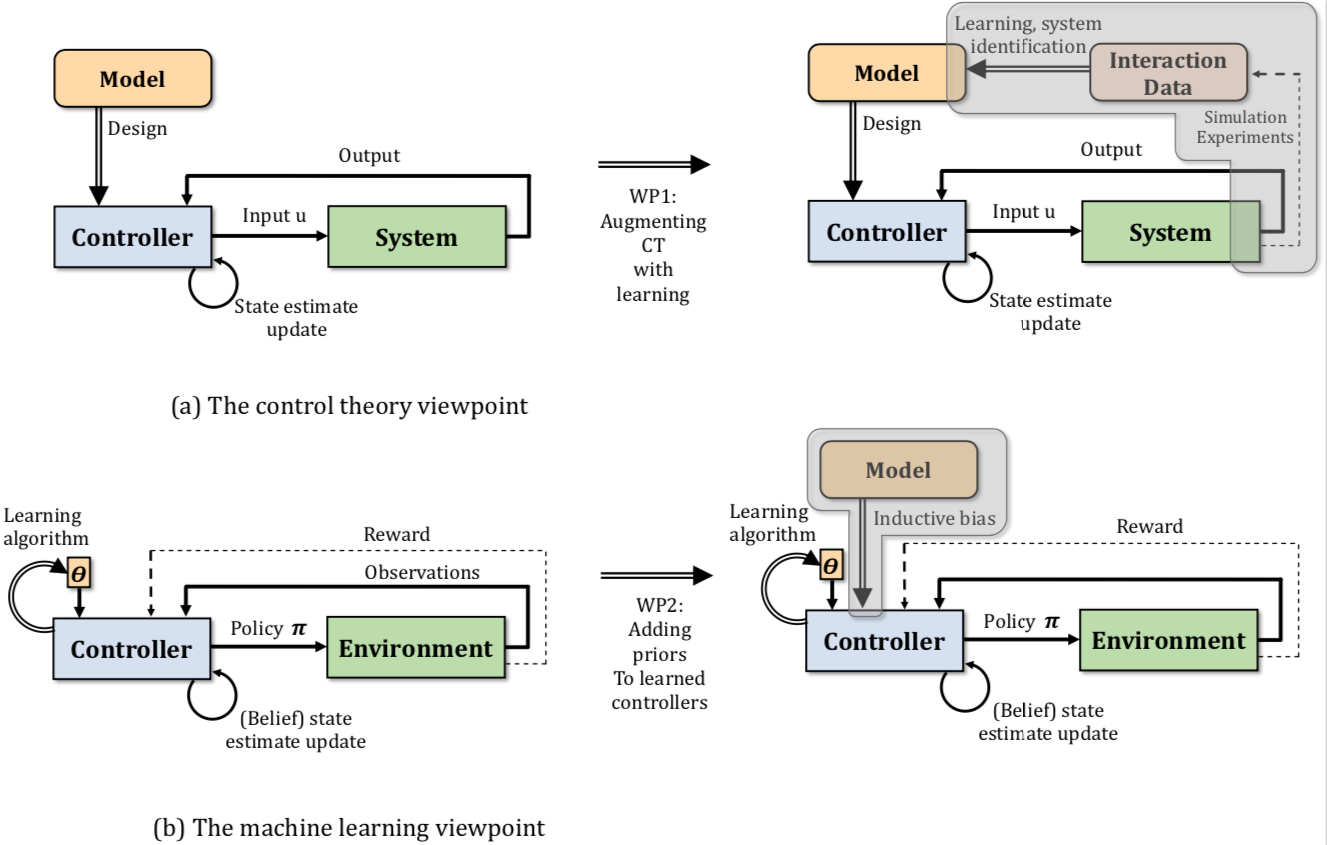

In the context of decentralized control of agents such as UAVs and mobile robots, DeLiCio proposes fundamental contributions at the crossroads of AI/ML and Control Theory (CT), the project's second key methodology alongside ML. Although distinct, the two fields have a long history of interaction, and as both mature their overlap becomes increasingly evident. CT provides model-based approaches, typically built on differential equations, to solve stabilization and estimation problems. These model-driven approaches are powerful because they rely on a thorough understanding of the system and can leverage established physical relationships. However, nonlinear models usually need to be simplified, and such approaches have difficulty accounting for noisy data and unmodeled uncertainties.
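As a minimal illustration of the model-based route, the sketch below assumes a deliberately simplified model, a double integrator `x'' = u` (for instance one axis of a UAV linearized around hover). The model, the gains and the function names `pole_placement_gains` and `simulate` are hypothetical and not taken from the project. Because the model is known, the feedback gains follow directly from the desired closed-loop poles rather than from data.

```python
def pole_placement_gains(p1, p2):
    """Return (kp, kd) so that x'' = u with u = -kp*x - kd*v has closed-loop poles p1, p2.

    Closed-loop characteristic polynomial: s^2 + kd*s + kp = (s - p1)(s - p2).
    """
    kd = -(p1 + p2)
    kp = p1 * p2
    return kp, kd


def simulate(x0, v0, kp, kd, dt=0.01, steps=1000):
    """Forward-Euler simulation of the (assumed) double integrator under PD state feedback."""
    x, v = x0, v0
    for _ in range(steps):
        u = -kp * x - kd * v           # state-feedback control law
        x, v = x + dt * v, v + dt * u  # x' = v, v' = u
    return x, v


kp, kd = pole_placement_gains(-2.0, -2.0)      # both closed-loop poles at -2
x_final, v_final = simulate(1.0, 0.0, kp, kd)  # start at x = 1, at rest
```

Here both gains come out as 4.0, and the simulated state decays close to the origin. The gains are only as good as the model: unmodeled drag or actuator delay would shift the real closed-loop poles, which is the limitation noted above.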

Machine Learning, on the other hand, aims at learning complex models from (often large amounts of) data and can provide data-driven models for a wide range of tasks. Markov Decision Processes (MDP) and Reinforcement Learning (RL) have traditionally provided a mathematically grounded framework for control applications, in which agents must learn policies from past interactions with an environment. In recent years, this methodology has been combined with deep neural networks, which act as high-capacity function approximators and model the (discrete or continuous) policy, a value function estimating the agent's cumulative reward, or both.
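To make the RL setting concrete, here is a minimal tabular Q-learning sketch on a hypothetical five-state chain (not an environment from the project): the agent starts at state 0 and receives a reward of 1 on reaching state 4. It learns action values purely from interaction; the deep RL methods mentioned above would replace the table `q` with a neural network approximator, which this sketch does not attempt. All names and hyperparameters are illustrative assumptions.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the terminal goal
ACTIONS = (0, 1)      # 0 = move left, 1 = move right
GOAL = N_STATES - 1


def step(s, a):
    """Deterministic chain dynamics: reward 1.0 only when the goal is reached."""
    s_next = max(0, s - 1) if a == 0 else min(GOAL, s + 1)
    reward = 1.0 if s_next == GOAL else 0.0
    return s_next, reward, s_next == GOAL


def greedy(q_row, rng):
    """Greedy action with random tie-breaking (matters while all values are 0)."""
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])


def q_learning(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, max_steps=50, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            # epsilon-greedy exploration
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q[s], rng)
            s_next, r, done = step(s, a)
            # Q-learning target: no bootstrapping from the terminal state
            target = r if done else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if done:
                break
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]
```

With these settings the greedy policy should move right in every non-terminal state, and `q[0][1]` should approach 0.9³ = 0.729, the discounted reward for reaching the goal in four steps.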

While learning has become the prevailing methodology in many applications, process control remains a field where, for many low-level control problems, control engineering cannot be replaced, mainly because learned controllers lack stability guarantees and are computationally expensive in embedded settings.

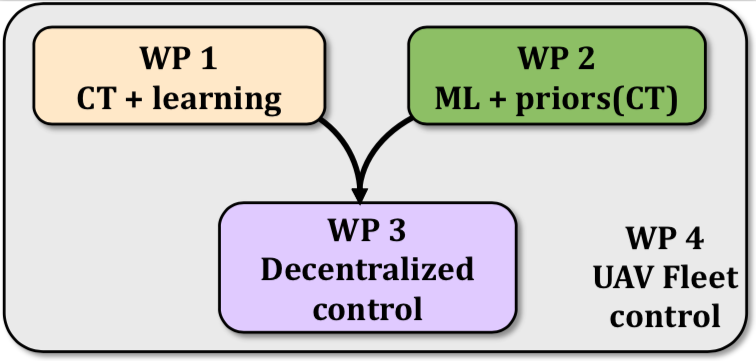

DeLiCio proposes fundamental research at the crossroads of ML/AI and CT, with planned algorithmic contributions on integrating models, prior knowledge and learning into control and the perception-action cycle:

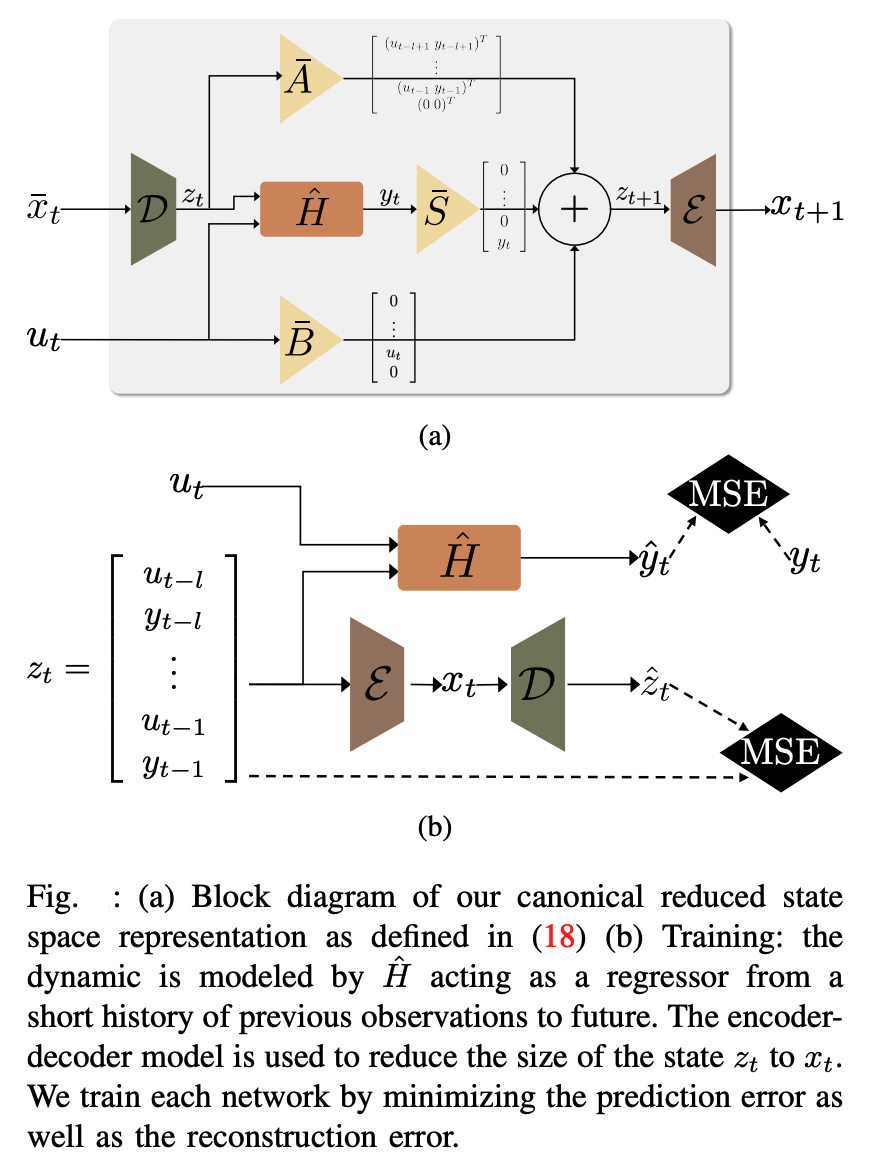

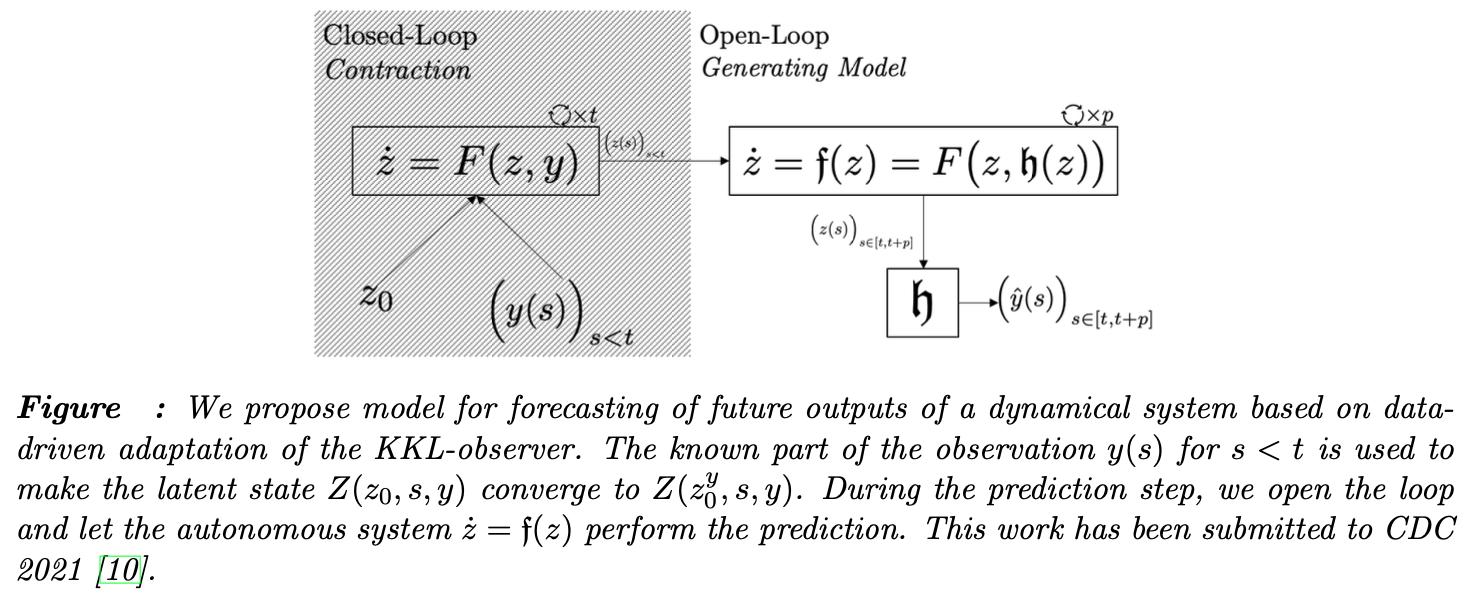

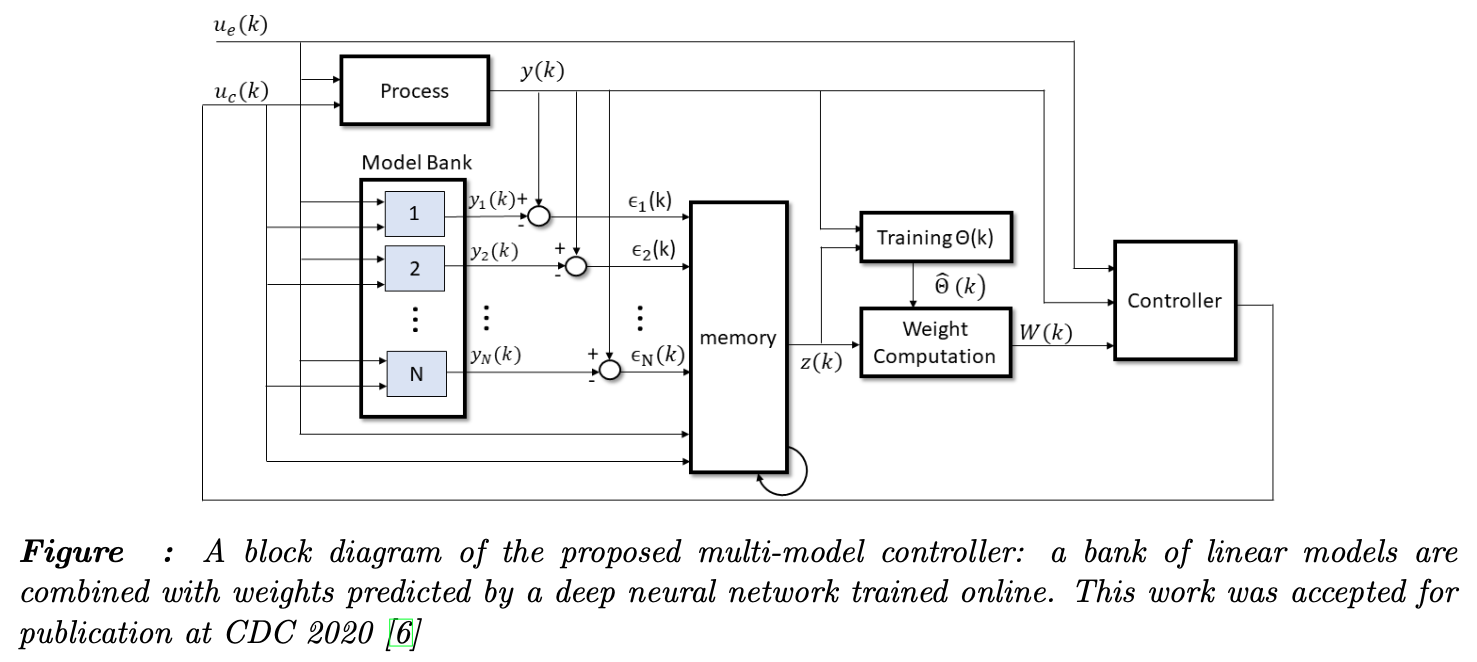

- data-driven learning and identification of physical models for control;

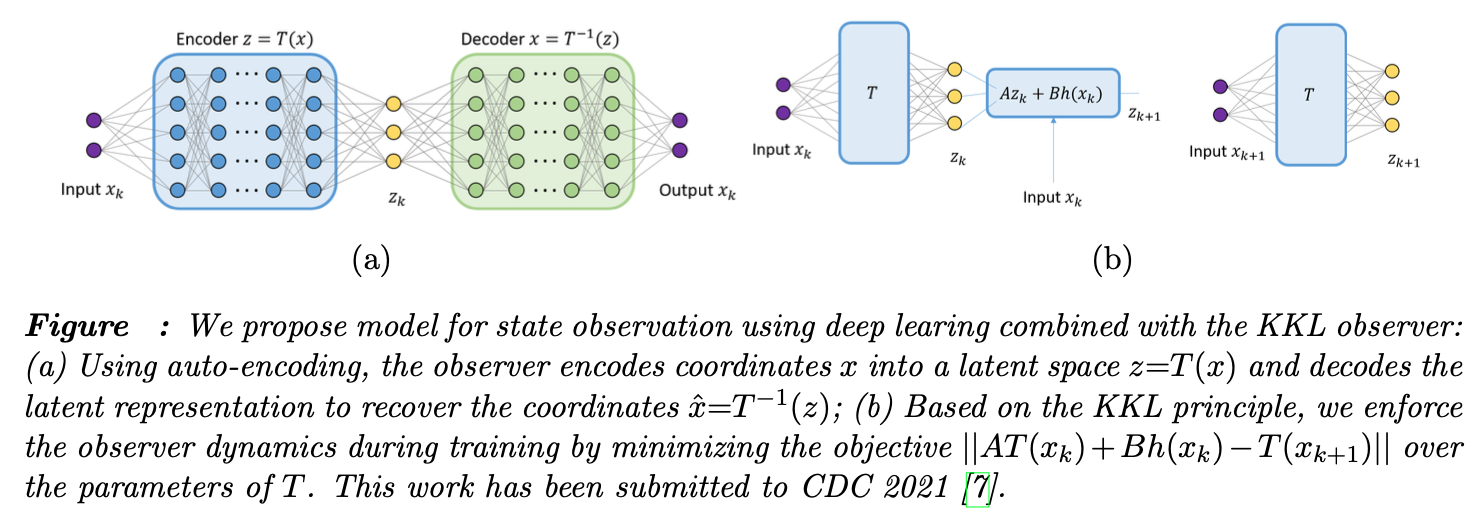

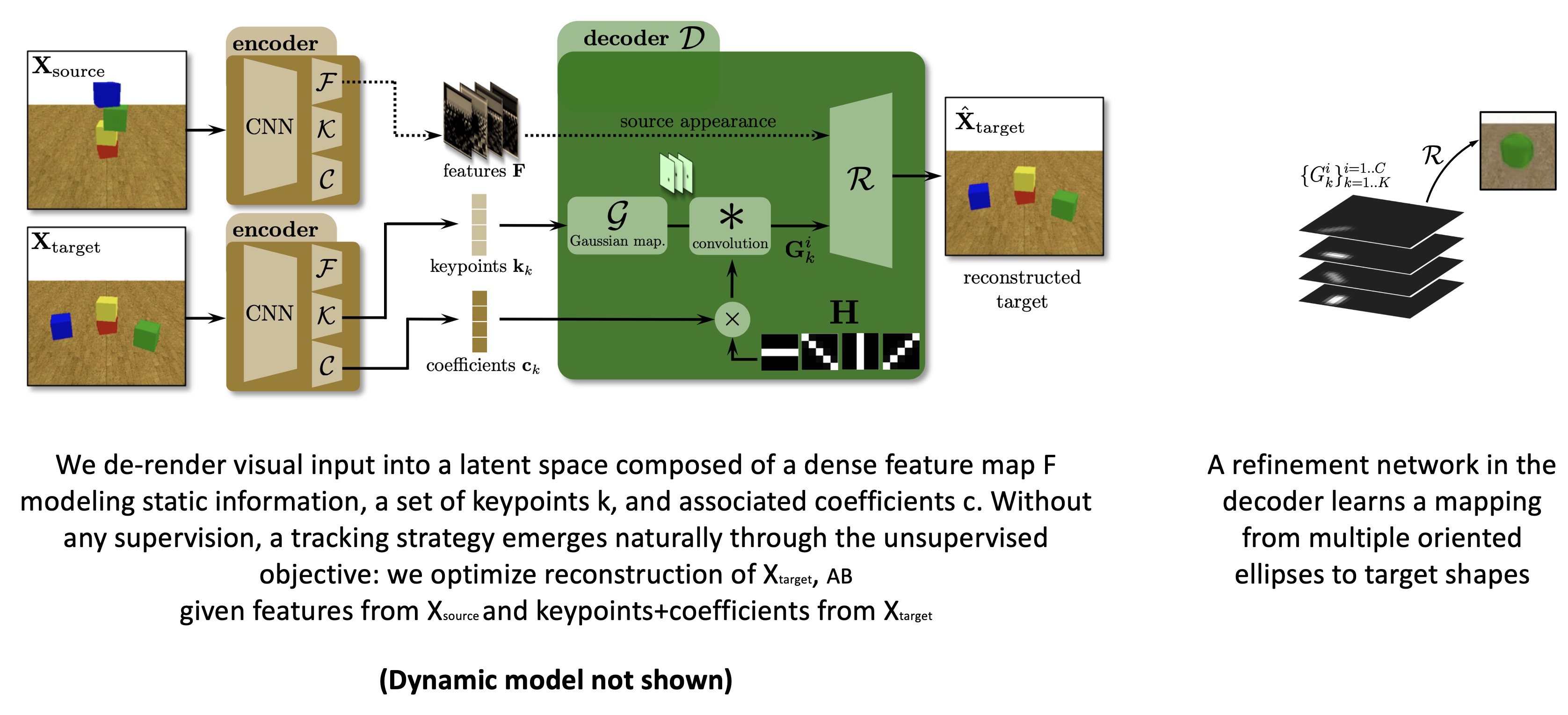

- state representation learning for control;

- stability and robustness priors for reinforcement learning;

- stable decentralized (multi-agent) control using ML and CT.
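The first of these topics, data-driven identification, can be illustrated in its simplest form: fitting a first-order discrete-time model `x[k+1] = a*x[k] + b*u[k]` to logged input/state data by ordinary least squares. The plant, its parameters and the function names below are hypothetical; models of interest to the project would be nonlinear and higher-dimensional.

```python
import random


def simulate_plant(a, b, n, noise_std, seed=0):
    """Generate n steps of a hypothetical first-order plant driven by random inputs."""
    rng = random.Random(seed)
    xs, us = [0.0], []
    for _ in range(n):
        u = rng.uniform(-1.0, 1.0)
        # next state = linear dynamics + Gaussian process noise
        xs.append(a * xs[-1] + b * u + rng.gauss(0.0, noise_std))
        us.append(u)
    return xs, us


def identify_first_order(xs, us):
    """Least-squares estimate of (a, b) by solving the 2x2 normal equations."""
    x, y = xs[:-1], xs[1:]
    sxx = sum(xi * xi for xi in x)
    sxu = sum(xi * ui for xi, ui in zip(x, us))
    suu = sum(ui * ui for ui in us)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    suy = sum(ui * yi for ui, yi in zip(us, y))
    det = sxx * suu - sxu ** 2
    # Cramer's rule on [[sxx, sxu], [sxu, suu]] @ [a, b] = [sxy, suy]
    a_hat = (sxy * suu - sxu * suy) / det
    b_hat = (sxx * suy - sxu * sxy) / det
    return a_hat, b_hat


xs, us = simulate_plant(0.9, 0.5, 500, noise_std=0.01)
a_hat, b_hat = identify_first_order(xs, us)
```

For this simulated plant the estimates land close to the true values `a = 0.9`, `b = 0.5`; with the noise set to zero the fit is exact up to rounding.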

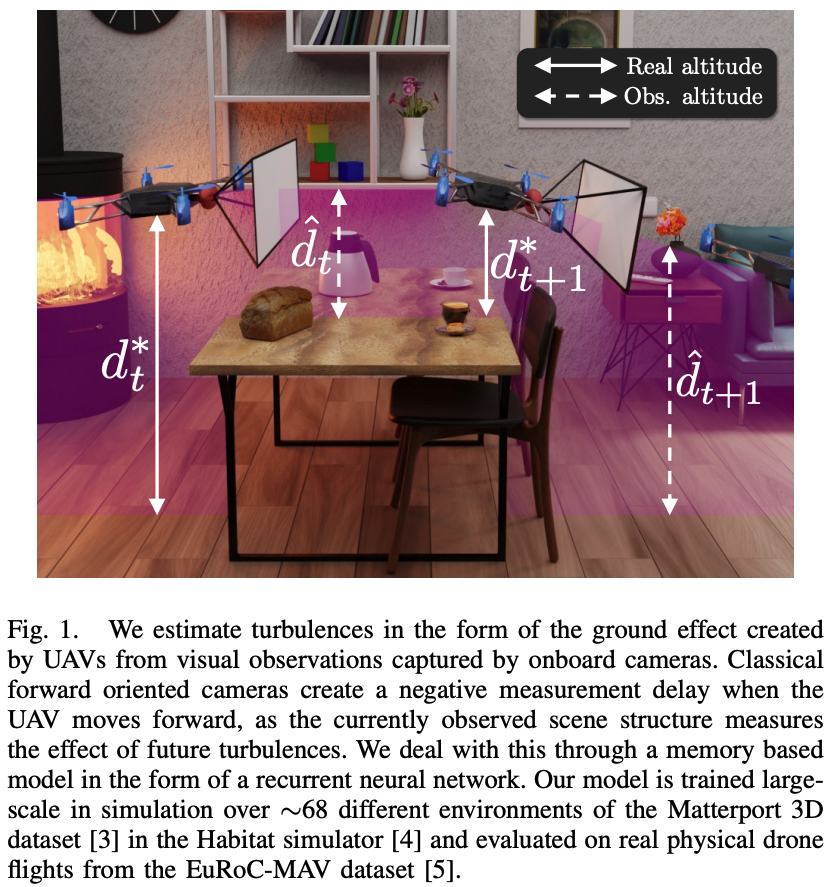

The project's planned methodological advances will be evaluated on a challenging application requiring both planning and fine-grained control: the synchronization of a UAV swarm through learning. The objective is to learn strategies that allow a swarm to achieve a high-level goal (navigation, visual search) while maintaining a formation.
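As a hand-designed (not learned) baseline for this objective, the sketch below uses a standard consensus law: each UAV follows a shared navigation velocity `v_ref` while correcting its position relative to its neighbors toward a desired offset. Positions are 2D points stored as Python complex numbers; the graph, offsets, gain and function name are illustrative assumptions. A learned strategy in the project's sense would replace or augment this fixed rule.

```python
def formation_step(positions, offsets, neighbors, v_ref, k=1.0, dt=0.05):
    """One forward-Euler step of a consensus-based formation controller.

    Each agent i steers its formation reference e_i = p_i - offsets[i] toward
    those of its neighbors while moving with the shared velocity v_ref.
    """
    refs = [p - d for p, d in zip(positions, offsets)]
    new_positions = []
    for i, p in enumerate(positions):
        disagreement = sum(refs[i] - refs[j] for j in neighbors[i])
        velocity = v_ref - k * disagreement
        new_positions.append(p + dt * velocity)
    return new_positions


offsets = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]   # desired square formation
neighbors = [[1, 3], [0, 2], [1, 3], [2, 0]]  # undirected ring graph
positions = [0j, 3 + 0j, 1 + 2j, -2 - 1j]     # arbitrary initial positions
v_ref = 1 + 0j                                # navigate along +x

for _ in range(200):  # 200 steps of dt = 0.05, i.e. 10 time units
    positions = formation_step(positions, offsets, neighbors, v_ref)
```

On this undirected ring the relative positions converge to the square, while the formation's centroid simply translates at `v_ref`, because the consensus corrections sum to zero across agents.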