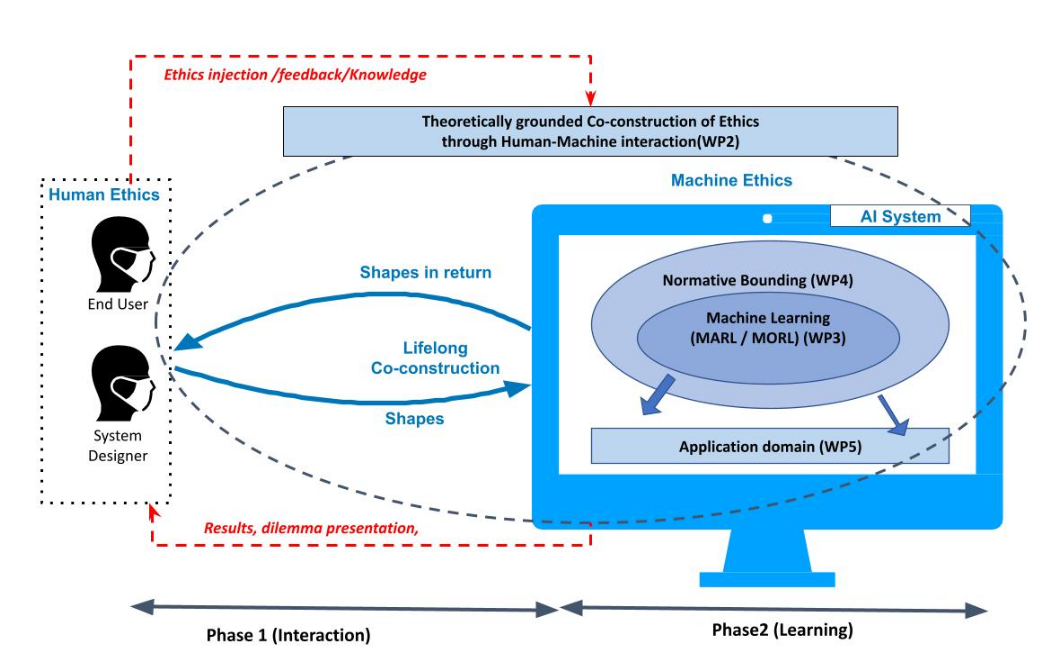

Acceler-AI (Adaptive Co-Construction of Ethics for LifElong tRustworthy AI) is an ongoing research project funded by the French National Research Agency (ANR) for 2023-2027. The project's main goal is to enable the adaptive co-construction of ethics in and for a long-lived intelligent system.

Because many applications involving Artificial Intelligence (AI) may have a beneficial or harmful impact on humans, there is both a societal and a research debate about how to incorporate ethical capabilities into them. This urges AI researchers to develop more ethically capable agents, shifting from ethics in design to ethics by design, with “explicit ethical agents” able to produce ethical behaviour by integrating reasoning about ethics and learning of ethics. Doing so means addressing three non-functional requirements: diversity, to cope with the richness and non-monolithic nature of approaches to ethics in human societies (e.g., knowledge, values); longevity, to cope with the long-lasting evolution of intelligent systems and their running environment; and trustworthiness, to be acceptable to society.

Hence, the project's main goal is to enable the adaptive co-construction of ethics in and for a long-lived intelligent system that addresses these requirements. This raises three challenges:

- Human-centric AI: how the system can achieve its goals while following ethical principles and human values;

- Safe AI: how to ensure that the system operates within specified boundaries while remaining sufficiently autonomous to learn ethics and adapt as its context and objectives evolve;

- Adaptive AI: how AI systems can adapt both on the technical side (e.g., openness) and on the societal side (e.g., lifelong learning, shifting expectations, and currently accepted moral values and norms).

Acceler-AI proposes multidisciplinary research and adopts a human-centric perspective to investigate the co-construction of “ethics”. It proposes a systemic approach that dynamically couples three adaptive processes: 1) top-down injection of “human ethical preferences/values/moral rules” via continuous, non-invasive human-agent interaction; 2) bottom-up lifelong learning and co-evolution (between humans and AI systems) of adaptive ethical behaviour; 3) a normative regulation process that bounds the ethics learning process.