Overview

The proposed 3D mesh watermarking benchmark has three components: a data set, a software tool, and two evaluation protocols. The data set contains several "standard" mesh models on which we suggest testing watermarking algorithms. The software tool provides both geometric and perceptual measurements of the distortion induced by watermark embedding, as well as implementations of a variety of attacks on watermarked meshes. The two application-oriented evaluation protocols define the main steps to follow when conducting evaluation experiments.

Paper

We have written a paper describing the proposed benchmark, which is included in the proceedings of the IEEE Shape Modeling International Conference 2010. If you would like to cite our paper, you can use this BibTeX entry.

We strongly suggest reading this paper before using our benchmark, or at least its "Evaluation Protocols" section, so that you know the steps to follow when benchmarking your method.

Data set

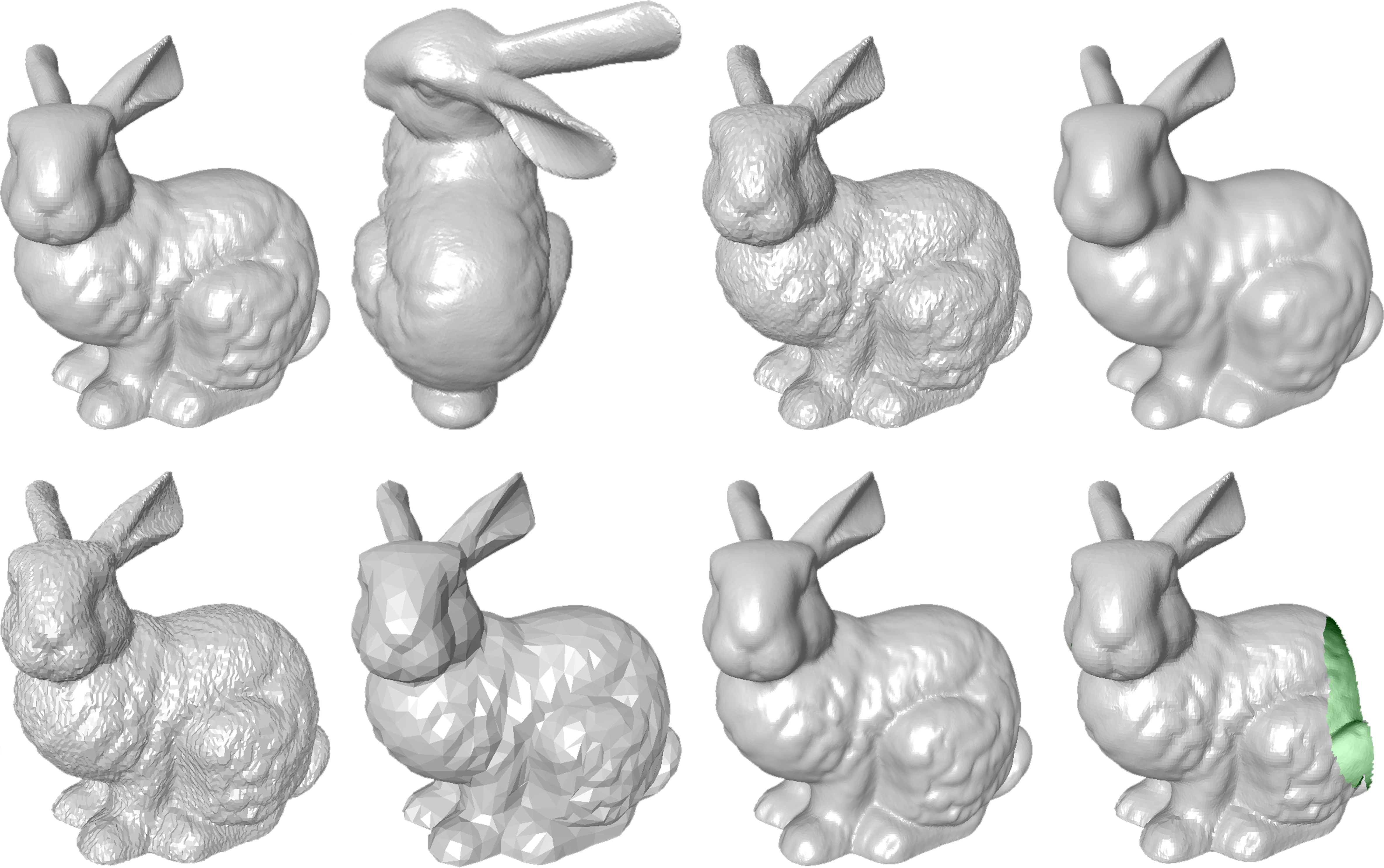

We have selected several representative meshes (with different numbers of vertices and different shape complexities) as test models, and have obtained permission to post them here. These models are:

|

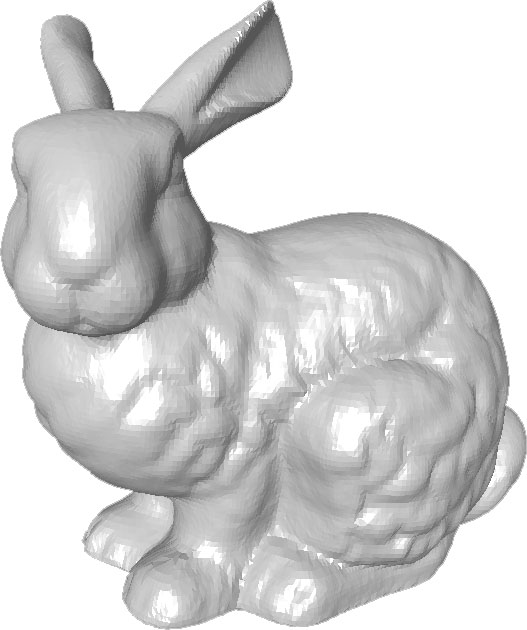

Bunny |

34835 vertices |

|

||

|

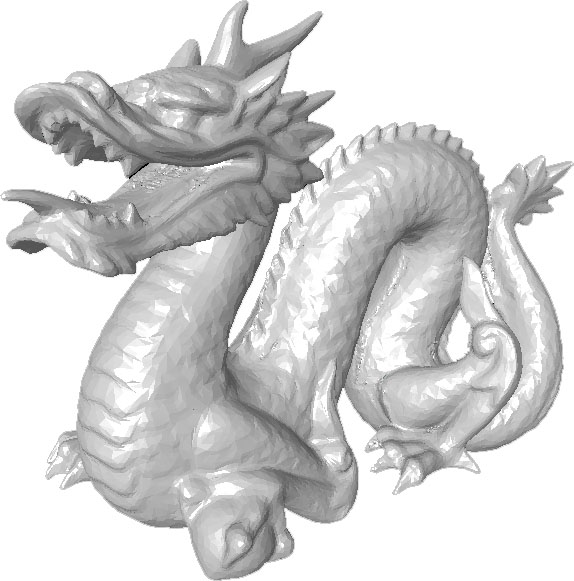

Dragon |

50000 vertices |

|

||

|

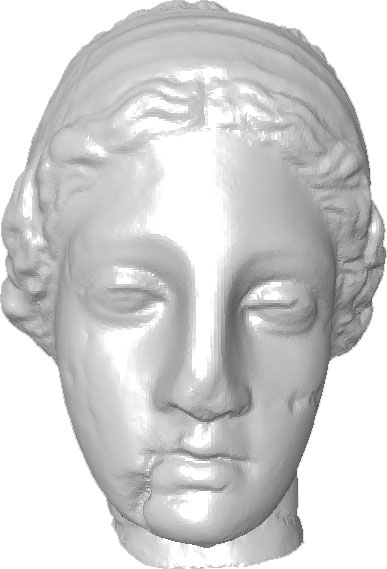

Venus |

100759 vertices |

|

||

|

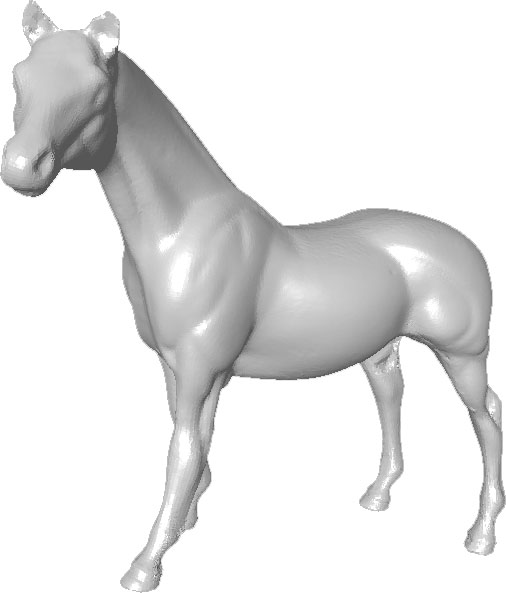

Horse |

112642 vertices |

|

||

| Rabbit |

70658 vertices |

|

||

| Ramesses | 826266 vertices |

|

||

| Cow | 2904 vertices |

|

||

| Hand | 36619 vertices |

|

||

| Casting | 5096 vertices |

|

||

|

Crank |

50012 vertices |

|
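As a quick sanity check after downloading, the vertex counts listed above can be compared with the counts declared in each model's .off header. The following is a minimal sketch rather than part of the benchmark software: the function names are hypothetical, and it assumes a plain ASCII OFF header (the `OFF` keyword followed by vertex, face, and edge counts).

```python
import io

# Vertex counts copied from the table above.
EXPECTED_VERTICES = {
    "Bunny": 34835,
    "Dragon": 50000,
    "Venus": 100759,
    "Horse": 112642,
    "Rabbit": 70658,
    "Ramesses": 826266,
    "Cow": 2904,
    "Hand": 36619,
    "Casting": 5096,
    "Crank": 50012,
}


def read_off_counts(stream):
    """Return (n_vertices, n_faces) read from the header of an OFF stream."""
    tokens = []
    for line in stream:
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        tokens.extend(line.split())
        if len(tokens) >= 4:  # "OFF" keyword plus vertex/face/edge counts
            break
    if len(tokens) < 4 or tokens[0] != "OFF":
        raise ValueError("not a plain ASCII OFF file")
    return int(tokens[1]), int(tokens[2])


def has_expected_vertex_count(model, stream):
    """True if the OFF stream declares the vertex count listed for `model`."""
    n_vertices, _ = read_off_counts(stream)
    return n_vertices == EXPECTED_VERTICES[model]


# Tiny in-memory example (a tetrahedron), so no file on disk is needed.
demo = io.StringIO(
    "OFF\n4 4 6\n0 0 0\n1 0 0\n0 1 0\n0 0 1\n"
    "3 0 1 2\n3 0 1 3\n3 0 2 3\n3 1 2 3\n"
)
print(read_off_counts(demo))  # prints (4, 4)
```

For a downloaded model, the same function can be given an open file object in place of the in-memory stream; the file name to use depends on how the zip archive is organized, which is not specified here.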

Notice: The Bunny and Dragon models posted here are manifold/simplified versions of the original models, which were created from scanning data by the Stanford Computer Graphics Laboratory. Please also be aware that the Dragon is a symbol of Chinese culture. Therefore, keep your renderings and other uses of this model in good taste. Don't animate or morph it, don't apply Boolean operators to it, and don't simulate nasty things happening to it (such as breaking, exploding, or melting). Choose another model for these sorts of experiments. (You can do anything you want to the Stanford Bunny, for example.) The Venus, Horse and Rabbit models are courtesy of Cyberware Inc., and the Ramesses, Cow, Hand, Casting and Crank models are courtesy of the AIM@SHAPE project.

Software tool

The software tool contains geometric and perceptual distortion measurement tools and implementations of a variety of common attacks. You can download both the binary version and the Microsoft Visual Studio projects (source code) of this software, which is built on CGAL (Computational Geometry Algorithms Library). To compile and build the Visual Studio projects, you must first download and install CGAL.

Evaluation protocols

Two application-oriented mesh watermarking evaluation protocols are proposed. The first protocol is perceptual-quality-oriented and the second is geometric-quality-oriented. Please refer to the aforementioned paper for details about these two protocols. A default attack configuration file is included in the software tool.

Note that the MSDM perceptual distance thresholds in the protocols assume that MSDM is computed with the radius parameter set to 0.005. To make it easier to verify the constraint on geometric distortion, we list here the diagonal lengths of the bounding boxes of the test models:

| Model | Bounding box diagonal length |
| --- | --- |
| Bunny | 2.131131 |
| Dragon | 2.364309 |
| Venus | 2.753887 |
| Horse | 2.104646 |
| Rabbit | 1.823454 |
| Ramesses | 1.853616 |
| Cow | 2.032768 |
| Hand | 2.157989 |
| Casting | 2.322810 |
| Crank | 2.423323 |
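The diagonal lengths above can be used to express a measured geometric distance relative to the size of the model. The sketch below is an illustration only, not the benchmark tool's code: the function names are hypothetical, it assumes the constraint is stated as a fraction of the bounding box diagonal, and `max_fraction` is a placeholder whose actual value must be taken from the paper.

```python
import math

# Bounding box diagonal lengths copied from the table above.
BBOX_DIAGONAL = {
    "Bunny": 2.131131,
    "Dragon": 2.364309,
    "Venus": 2.753887,
    "Horse": 2.104646,
    "Rabbit": 1.823454,
    "Ramesses": 1.853616,
    "Cow": 2.032768,
    "Hand": 2.157989,
    "Casting": 2.322810,
    "Crank": 2.423323,
}


def bbox_diagonal(vertices):
    """Diagonal length of the axis-aligned bounding box of (x, y, z) points."""
    xs, ys, zs = zip(*vertices)
    return math.sqrt(
        (max(xs) - min(xs)) ** 2
        + (max(ys) - min(ys)) ** 2
        + (max(zs) - min(zs)) ** 2
    )


def relative_distortion(distance, model):
    """Express an absolute geometric distance as a fraction of the diagonal."""
    return distance / BBOX_DIAGONAL[model]


def within_geometric_constraint(distance, model, max_fraction):
    """Check distance <= max_fraction * diagonal.

    `max_fraction` is a hypothetical parameter; use the threshold that the
    chosen evaluation protocol in the paper prescribes.
    """
    return relative_distortion(distance, model) <= max_fraction


# Example with a placeholder threshold (not a value from the paper).
print(within_geometric_constraint(0.001, "Bunny", max_fraction=0.001))
```

`bbox_diagonal` is included so that the listed values can be re-derived from the vertex coordinates of a downloaded model if needed.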

Download

To sum up, you need to download the following items to use our benchmark:

- The 10 mesh models (in .off or .obj format) on which you test the watermarking algorithm (all models in a single zip file);
- The software tool (binary version or source code) to measure the distortion and to conduct attacks;
- The paper which explains the proposed benchmark, particularly the evaluation protocols defining the steps to follow when benchmarking your method.

Results

The evaluation and comparison results of two recent methods on the Venus model can be found on this web page. More results (on other models and for other watermarking methods) will be added soon. You are also welcome to send us your test results if you would like to post them on our server.

Acknowledgements

We would like to thank Prof. M. Levoy for granting us permission to post the Bunny and Dragon models on our public server. The Venus, Horse and Rabbit models are courtesy of Cyberware Inc., and the Ramesses, Cow, Hand, Casting and Crank models are courtesy of the AIM@SHAPE project. We are also very grateful to Dr. P. Cignoni for allowing us to integrate the Metro tool in our benchmarking software.

Contact

The members of this project are Kai Wang, Guillaume Lavoué, Florence Denis, Atilla Baskurt, and Xiyan He, mostly from the M2DisCo team of the LIRIS laboratory, CNRS, INSA-Lyon/Université Lyon 1, France.

Please email us if you have any questions, suggestions, comments, or bugs to report. Your feedback is valuable to us.

Last updated: October 1, 2010